When Software-defined Storage Meets Software-defined Networking

Software-defined infrastructure has become the new standard for managing data centers, as it gives platform operators the flexibility and agility to customize hardware resources for software systems and applications. As the backbone technology, software-defined networking (SDN) allows network operators to configure and manage network resources through programmable switches. Following the demonstrated benefits of SDN, software-defined storage (SDS) has also been developed. A typical example is software-defined flash (SDF). Similar to SDN, SDF enables upper-level software to manage the low-level flash chips for improved performance and resource utilization. Since the cost of flash chips has decreased dramatically while they offer orders of magnitude better performance than conventional hard disk drives (HDDs), they are becoming the mainstream choice in large-scale data centers. Naturally, SDF has been under intensive study and wide deployment.

Both SDN and SDF have their own control plane and data plane, and provide programmability for developers to define their policies for resource management and scheduling. However, SDN and SDF are managed separately in modern data centers. At first glance, this is a reasonable design that follows rack-scale hierarchical design principles. However, it suffers from suboptimal end-to-end performance, due to the lack of coordination between SDN and SDF. Although both SDN and SDS can make best-effort attempts to achieve their quality of service, they do not share their states and lack global information for storage management and scheduling, making it challenging for applications to achieve predictable end-to-end performance. Prior studies have proposed various software techniques, such as token bucket and virtual cost, for enforcing performance isolation across the rack-scale storage stack. However, they treat the underlying SSDs as a black box, and cannot capture their hardware events, such as garbage collection (GC) and I/O scheduling in the storage stack. Thus, it is still hard to achieve predictable performance across the entire rack.
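To make the black-box limitation concrete, the token bucket technique mentioned above can be sketched as follows. This is a minimal, generic illustration and not code from any of the prior systems; the class name `TokenBucket` and its parameters are hypothetical. Note that admission depends only on the configured rate and the elapsed time, so the bucket has no way to react to device-internal events such as GC.

```python
class TokenBucket:
    """Generic token-bucket rate limiter (illustrative sketch only)."""

    def __init__(self, rate, capacity):
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum number of stored tokens
        self.tokens = capacity    # start with a full bucket
        self.last = 0.0           # time of the last refill, in seconds

    def allow(self, now, cost=1.0):
        """Return True if a request of the given cost may be issued at time `now`."""
        elapsed = now - self.last
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

For example, a bucket with `rate=2` and `capacity=2` admits two back-to-back requests, rejects a third at the same instant, and admits one more after half a second, regardless of whether the target SSD is busy with GC at that moment.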

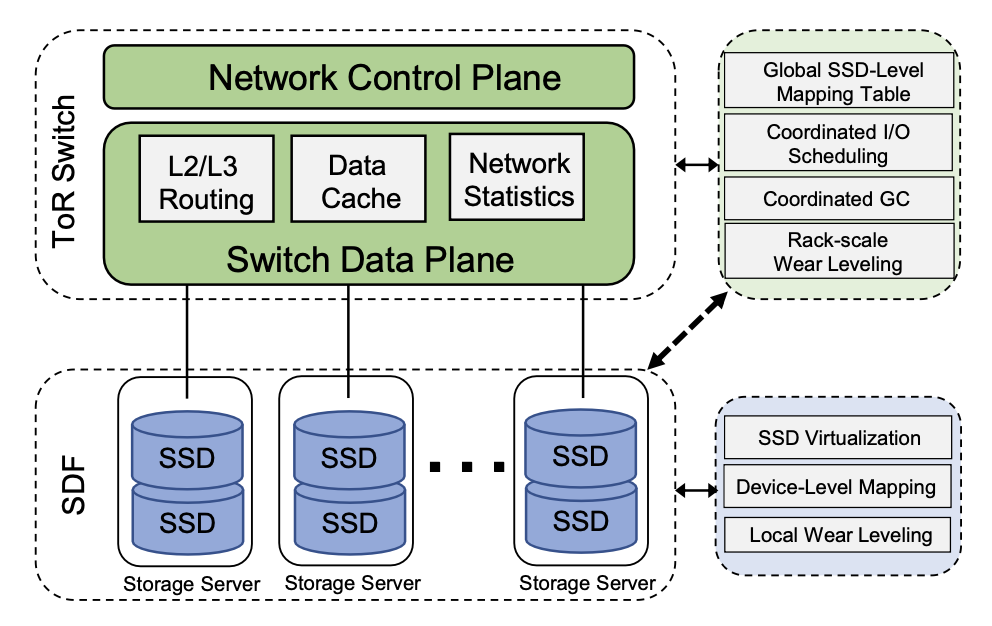

We develop a new software-defined architecture, named RackBlox, to exploit the capabilities of SDN and SDF in a coordinated fashion. SDN and SDF share a similar architecture: the control plane is responsible for managing the programmable devices, and the data plane is responsible for processing I/O requests. We can therefore integrate and co-design SDN and SDF, and redefine their functions to improve the efficiency of the entire rack-scale storage system. RackBlox does not require new hardware, as both SDN and SDF today already offer the flexibility to redefine the functions of their data planes.

RackBlox decouples the storage management functions of flash-based solid-state drives (SSDs), and allows the SDN to track and manage the states of SSDs in a rack. This enables state sharing between SDN and SDF, and facilitates global storage resource management. With this capability, RackBlox enables (1) coordinated I/O scheduling, in which it dynamically adjusts the I/O scheduling in the storage stack based on the measured and predicted network latency, so that scheduling efforts across the network and storage stack are coordinated to achieve predictable end-to-end performance; (2) coordinated garbage collection (GC), in which it coordinates the GC activities across the SSDs in a rack to minimize their impact on incoming I/O requests; and (3) rack-scale wear leveling, in which it enables global wear leveling among SSDs in a rack by periodically swapping data, improving device lifetime for the entire rack.
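The idea behind coordinated I/O scheduling in (1) can be illustrated with a small sketch. This is not RackBlox's actual scheduler: it assumes, for illustration only, that each request carries an end-to-end latency target, the network latency already measured on the way in, and a predicted network latency for the reply, and that the storage side serves the request with the least remaining time budget first. The names `CoordinatedQueue`, `storage_slack`, and all parameters are hypothetical.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Request:
    slack_us: float                    # time left for the storage stack
    req_id: int = field(compare=False)  # not used for ordering


def storage_slack(target_us, net_in_us, predicted_net_out_us):
    """Time budget left for storage after accounting for network latency."""
    return target_us - net_in_us - predicted_net_out_us


class CoordinatedQueue:
    """Serve the request with the smallest remaining storage budget first."""

    def __init__(self):
        self._heap = []

    def submit(self, req_id, target_us, net_in_us, predicted_net_out_us):
        slack = storage_slack(target_us, net_in_us, predicted_net_out_us)
        heapq.heappush(self._heap, Request(slack, req_id))

    def next_request(self):
        """Return the id of the most urgent request, or None if empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap).req_id
```

In this sketch, a request that was delayed in the network arrives with less slack and is moved ahead of requests that arrived quickly, which is one simple way for the storage stack to compensate for network delay instead of scheduling in isolation.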

We implement RackBlox with a programmable Tofino switch and programmable SSDs (i.e., open-channel SSDs). We evaluate RackBlox with network traces collected from various data centers and a variety of data-intensive applications. Our experiments show that RackBlox reduces the tail latency of end-to-end I/O requests by up to 5.8x, and can achieve a uniform lifetime for a rack of SSDs without introducing much additional performance overhead. To learn the details of this project, please check our recent publications.

- RackBlox: A Software-Defined Rack-Scale Storage System with Network-Storage Co-Design

Benjamin Reidys, Yuqi Xue, Daixuan Li, Bharat Sukhwani, Wen-mei Hwu, Deming Chen, Sameh Asaad, Jian Huang

To Appear in the 29th ACM Symposium on Operating Systems Principles (SOSP'23)

- Building Next-Generation Software-Defined Data Centers with Network-Storage Co-design

Benjamin Reidys, Jian Huang

The 1st Workshop on Hot Topics in System Infrastructure (HotInfra'23)